Welcome to a tutorial on how to create a simple web scraper in Python. So you need to fetch some data from another webpage in Python?

A simple web scraper in Python generally consists of 2 parts:

- Using `requests` to fetch the webpage.
- Using an HTML parser such as `BeautifulSoup` to find and extract data from the page.

That should cover the basics, but just how does it work exactly? Read on for the example!

DOWNLOAD & NOTES

Here is the download link to the example code, so you don’t have to copy-paste everything.

EXAMPLE CODE DOWNLOAD

Just click on “download zip” or do a git clone. I have released it under the MIT license, so feel free to build on top of it or use it in your own project.

PYTHON WEB SCRAPER

All right, let us now get into the details of the Python web scraper.

QUICK SETUP

- Create a virtual environment with `virtualenv venv` and activate it: `venv\Scripts\activate` (Windows) or `source venv/bin/activate` (Linux/Mac).
- Install the required libraries: `pip install flask requests beautifulsoup4`
- For those who are new, the default Flask folders are:
  - `static`: public files (JS/CSS/images/videos/audio)
  - `templates`: HTML pages

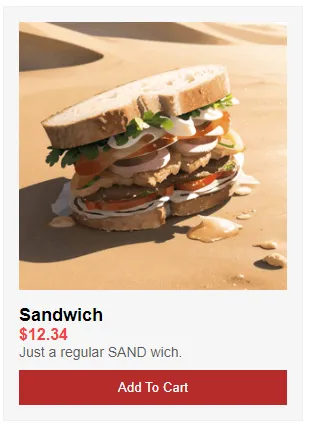

STEP 1) DUMMY PRODUCT PAGE

    <div id="product">
      <img src="static/sandwich.png" id="pImg">
      <div id="pName">Sandwich</div>
      <div id="pPrice">$12.34</div>
      <div id="pDesc">Just a regular SAND wich.</div>
      <input type="button" value="Add To Cart" id="pAdd">
    </div>

First, we need a web page to work with. Here’s a simple dummy product page; we will use the web scraper to extract the product information from it.
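The page has to be served over HTTP before the scraper can fetch it. A minimal sketch of a Flask app that does so might look like the following. The route, the names `app`, `PRODUCT_PAGE` and `product`, and the choice to inline the markup as a string (rather than load it from the templates folder) are assumptions made to keep the example self-contained; this is not the code from the download.

```python
# Hypothetical minimal server for the dummy product page (not the original code).
from flask import Flask

app = Flask(__name__)

# Markup inlined for the sketch; a real project would keep it in templates/.
PRODUCT_PAGE = """<!DOCTYPE html>
<html>
  <body>
    <div id="product">
      <img src="static/sandwich.png" id="pImg">
      <div id="pName">Sandwich</div>
      <div id="pPrice">$12.34</div>
      <div id="pDesc">Just a regular SAND wich.</div>
      <input type="button" value="Add To Cart" id="pAdd">
    </div>
  </body>
</html>"""


@app.route("/")
def product():
    # Returning a string makes Flask send it back as an HTML response.
    return PRODUCT_PAGE
```

Since the scraper in the next step requests `http://localhost`, the app would need to listen on port 80, for example by calling `app.run(port=80)` at the bottom of the file. Binding to that port may need elevated privileges on some systems.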

STEP 2) PYTHON WEB SCRAPER

    # (A) LOAD REQUIRED MODULES
    import requests
    from bs4 import BeautifulSoup

    # (B) GET HTML
    html = requests.get("http://localhost").text
    # print(html)

    # (C) HTML PARSER
    soup = BeautifulSoup(html, "html.parser")
    name = soup.find("div", {"id": "pName"}).text
    desc = soup.find("div", {"id": "pDesc"}).text
    price = soup.find("div", {"id": "pPrice"}).text
    image = soup.find("img", {"id": "pImg"})["src"]
    print(name)
    print(desc)
    print(price)
    print(image)

Running the script prints:

    (venv) D:\HTTP>py S2_scrape.py
    Sandwich
    Just a regular SAND wich.
    $12.34
    static/sandwich.png

Yep. That is pretty much all it takes to scrape a website in Python.

- Load the required modules.
- Use `requests.get(URL).text` to get the web page as text.
- We could run “hardcore string searches” or regular expressions against the “HTML string” to extract information. But the smarter way is to use an HTML parser, which makes data extraction a lot easier.
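To see why a parser is the smarter choice, here is a small comparison. It is only a sketch: the regular expression, the helper names, and the second snippet with reordered attributes are made up for illustration.

```python
import re
from bs4 import BeautifulSoup

# Two equivalent snippets; the second differs only in attribute order and quotes.
html_a = '<div id="pPrice">$12.34</div>'
html_b = "<div class='price' id='pPrice'>$12.34</div>"

# A naive pattern written against the exact markup of html_a.
pattern = re.compile(r'<div id="pPrice">(.*?)</div>')


def price_by_regex(html):
    """Return the price using the hard-coded pattern, or None if it does not match."""
    match = pattern.search(html)
    return match.group(1) if match else None


def price_by_parser(html):
    """Return the price by looking up the element id with BeautifulSoup."""
    tag = BeautifulSoup(html, "html.parser").find("div", {"id": "pPrice"})
    return tag.text if tag is not None else None


for label, html in (("a", html_a), ("b", html_b)):
    print(label, price_by_regex(html), price_by_parser(html))
```

Running it prints `a $12.34 $12.34` and then `b None $12.34`. The pattern silently misses the reordered markup, while the parser does not care how the attributes are written.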

P.S. Text and image sources are not the only information that can be extracted. Follow up with the BeautifulSoup documentation if you need more; links are below.
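As a rough illustration of what else can be pulled out, the sketch below reads a tag's attributes as a dictionary, uses a CSS selector, collects every element id inside the product, and converts the price to a number. The inline HTML string mirrors the dummy page so the example runs without a server; the variable names are only for illustration.

```python
from bs4 import BeautifulSoup

html = """
<div id="product">
  <img src="static/sandwich.png" id="pImg">
  <div id="pName">Sandwich</div>
  <div id="pPrice">$12.34</div>
  <div id="pDesc">Just a regular SAND wich.</div>
  <input type="button" value="Add To Cart" id="pAdd">
</div>
"""
soup = BeautifulSoup(html, "html.parser")

# CSS selectors work too: select_one() returns the first match or None.
button = soup.select_one("#product input[type=button]")
button_label = button["value"]

# .attrs exposes every attribute of a tag as a dictionary.
image_attrs = soup.find("img", {"id": "pImg"}).attrs

# find_all(True) matches every tag; collect the ids of everything inside the product.
child_ids = [tag.get("id") for tag in soup.find(id="product").find_all(True)]

# Turn the price text into a number (assumes a leading "$" and no thousands separator).
price = float(soup.find(id="pPrice").get_text(strip=True).lstrip("$"))

print(button_label, image_attrs, child_ids, price)
```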

EXTRAS

That’s all for the tutorial, and here is a small section on some extras and links that may be useful to you.
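One extra worth mentioning: the scraper in step 2 assumes that the request succeeds and that every element exists on the page. A slightly more defensive version could look like this sketch. The function names, the 10-second timeout, and the choice to return `None` for missing elements are assumptions, not part of the original script.

```python
import requests
from bs4 import BeautifulSoup


def fetch_html(url, timeout=10):
    """Download a page, raising an exception on network errors or 4xx/5xx codes."""
    response = requests.get(url, timeout=timeout)
    response.raise_for_status()
    return response.text


def text_or_none(soup, tag, element_id):
    """Return the stripped text of the element, or None when it is missing."""
    found = soup.find(tag, {"id": element_id})
    return found.get_text(strip=True) if found is not None else None


def scrape_product(html):
    """Extract the product fields from the HTML of the dummy product page."""
    soup = BeautifulSoup(html, "html.parser")
    image = soup.find("img", {"id": "pImg"})
    return {
        "name": text_or_none(soup, "div", "pName"),
        "desc": text_or_none(soup, "div", "pDesc"),
        "price": text_or_none(soup, "div", "pPrice"),
        "image": image.get("src") if image is not None else None,
    }
```

A call such as `scrape_product(fetch_html("http://localhost"))` can then be wrapped in a `try` / `except requests.RequestException` block, so a server that is down produces a readable message instead of a traceback.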

LINKS & REFERENCES

THE END

Thank you for reading, and we have come to the end. I hope this guide has helped you to better understand web scraping in Python, and if you have anything to share, please feel free to comment below. Good luck and happy coding!